Chunk, cite, clarify, build: A content framework for AI search

Search has crossed a tipping point.

Generative AI is replacing traditional ranking systems with probabilistic, intent-based retrieval.

Content is no longer competing for positions – it’s competing to be cited, synthesized, and surfaced by language models.

To stay visible, content must be clear, credible, and machine-readable by design.

The generative front door: Rethinking content for AI-led search

The traditional, deterministic model of classic information retrieval (the “10 blue links”) is being replaced by probabilistic, generative information retrieval based on searcher intent.

AI agents, Google’s AI Overviews, and AI-native search modes are growing rapidly and becoming the new “front door” to information discovery. Early signs suggest this shift also sends higher-quality referral traffic to websites over time.

Succeeding in AI-first search requires a mindset shift – from traditional SEO to generative engine optimization (GEO).

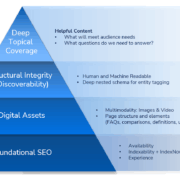

The image below shows the adjustments that need to happen to get GEO-ready.

One direct result of this change: Your content strategy needs to adapt.

Content now needs to focus on audience intent, ensuring it covers all relevant topics and subtopics.

It should also be broken into smaller, self-contained pieces across formats such as text, images, PDFs, and videos, while staying consistent across those formats.


Your content should also demonstrate strong E-E-A-T (experience, expertise, authoritativeness, and trustworthiness) and remain consistent across all channels, adapting to the evolving needs and intent of your audience.

To prepare your content for AI-native search, you’ll need to take a different approach.

Chunk, cite, clarify, build: A framework for AI-first indexing


Conversational experiences are becoming the new normal, from ChatGPT and Google’s AI Overviews to Perplexity snapshots and, soon, a wave of AI “modes” built into every major search platform.

These systems no longer just display information; they synthesize it, remixing multimodal content into a fluid conversation.

For publishers and marketers, the implications are profound:

- Probabilistic retrieval over deterministic ranking: Instead of static positions on a page, content now competes in a probability-weighted lottery of answer generation.

- AI chat serves as the new front door: Early data shows AI surfaces can increase referral traffic, but only to sources the models deem authoritative.

- Traditional SEO signals are necessary but not sufficient: Visibility hinges on how well your content feeds large language models (LLMs) – both technically and semantically.

To thrive, we need a roadmap built for this AI-first index.

Enter the “chunk, cite, clarify, build” framework.

This process helps content stay intact when models split it into tokens and passages, earn citations, and remain unambiguous under machine interpretation.

Chunk your content for optimal AI consumption

- How LLMs read

  - Traditional crawlers parse HTML tags; LLMs ingest extracted text, break it into tokens, and map the semantic relationships between them. They reward clarity, coherence, and context.

- The 100-to-300-word chunk

  - Think of each chunk as a self-contained mini-article:

    - One dominant idea, stated plainly.

    - Supporting evidence, such as data, examples, images, or PDFs, placed close by.

    - Enough context that it still makes sense if quoted in isolation.

    - A clear connection to the main topic and its related subtopics.

  - Retrieval-augmented generation (RAG) systems – from Google’s AI Overviews to Perplexity – love these bite-size knowledge nuggets.


- Heading hierarchy and short paragraphs

  - Use a logical H1 → H2 → H3 structure and keep paragraphs tight (one idea each).

  - This scaffolding guides models to the right passage and reduces the risk of burying key points.

- Structured formats as goldmines

  - Lists, tables, and FAQ blocks provide explicit boundaries and make it easy for AI to lift answers verbatim.

- Front-load insights, minimize noise

  - Place definitions, takeaways, and data early.

  - Avoid pop-ups or intrusive CTAs that add “pollution” to the chunk.

- Prefer comprehensive hubs

  - A single, in-depth page that covers an entire topic landscape often beats a scattershot of thin posts.

  - This helps AI satisfy multiple queries without jumping domains.

- A solid technical foundation

  - Make sure your website loads fast, your platform outputs clean code, and your pages can be fully crawled, rendered, and indexed.

  - A technically sound website lays the foundation for everything that follows.
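To make the 100-to-300-word guideline concrete, here is a minimal sketch that splits a Markdown draft into heading-scoped chunks. It assumes headings start with `#` and paragraphs are separated by blank lines; `Chunk` and `chunk_by_headings` are hypothetical names, and the 300-word cap simply mirrors the range above. It illustrates the idea only and is not how any particular AI search system chunks pages.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str  # nearest heading, kept so the chunk makes sense on its own
    text: str
    words: int


def chunk_by_headings(markdown: str, max_words: int = 300) -> list[Chunk]:
    """Split Markdown into heading-scoped chunks of at most max_words words.

    Paragraphs stay whole unless a single paragraph exceeds max_words.
    """
    chunks: list[Chunk] = []
    heading = ""
    buffer: list[str] = []  # paragraphs waiting to be emitted as one chunk

    def flush() -> None:
        if buffer:
            body = "\n\n".join(buffer)
            chunks.append(Chunk(heading, body, len(body.split())))
            buffer.clear()

    # Blank lines separate blocks; a block starting with '#' opens a new section.
    for block in re.split(r"\n\s*\n", markdown.strip()):
        block = block.strip()
        if block.startswith("#"):
            flush()
            first, _, rest = block.partition("\n")
            heading = first.lstrip("#").strip()
            block = rest.strip()
        words = block.split()
        if not words:
            continue
        # An oversized paragraph is cut into max_words-sized pieces.
        while len(words) > max_words:
            flush()
            chunks.append(Chunk(heading, " ".join(words[:max_words]), max_words))
            words = words[max_words:]
        if not words:
            continue
        # Start a new chunk if this paragraph would push the current one past the cap.
        if sum(len(p.split()) for p in buffer) + len(words) > max_words:
            flush()
        buffer.append(" ".join(words))
    flush()
    return chunks
```

Chunks under roughly 100 words (for example, `[c for c in chunks if c.words < 100]`) are candidates to merge or expand. Carrying the nearest heading with every chunk is what keeps it understandable when it is quoted in isolation.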

Cite for credibility and trust signals

- E-E-A-T evolves

  - Experience, expertise, authoritativeness, and trustworthiness now apply to both humans and machines.

  - Demonstrable first-hand experience is a differentiator AI cannot genuinely replicate. Ways to show it include:

    - Adding content created by experts.

    - Publishing fresh, relevant content.

    - Leveraging user-generated content.

- Authoritative sourcing

  - Inline citations from .gov, .edu, or respected industry bodies.

  - Visible author bylines with credentials (and even a short anecdote).

  - Disclosure of where AI assisted, to reinforce transparency.

- Brand mentions as ranking fuel

  - LLMs weigh external validation – social buzz, reviews, and Reddit threads.

  - Stimulate conversation in communities your audience trusts.

- Keep claims and evidence together

  - Place statistics, quotes, or links in the same 100-to-300-word chunk as the assertion they support.

  - Separation risks the model retrieving the claim without the proof.

- Vigilance against hallucinations

  - Monitor how AI describes your brand.

  - If you spot inaccuracies, publish clarifying content and submit feedback – the correction chunk can then become authoritative source material.

  - AI systems draw on both recent and long-established data about your brand. It’s critical for brands to:

    - Centralize their brand data in one place.

    - Put guardrails in place that keep that data consistent and reduce the risk of hallucinations.
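The “keep claims and evidence together” rule can be spot-checked automatically. The sketch below flags chunks that state a statistic but contain no link in the same chunk. The regular expressions are hypothetical and deliberately rough (a number followed by %, “percent,” “x,” “million,” or “billion” counts as a statistic; a bare URL or a Markdown-style link counts as evidence), so treat each hit as something for an editor to review, not as a verdict.

```python
import re

# Hypothetical heuristics; tune them for your own content.
# A "statistic" is a number followed by %, "percent", "x", "million", or "billion".
STAT = re.compile(r"\b\d+(?:\.\d+)?\s?(?:%|percent\b|x\b|million\b|billion\b)", re.IGNORECASE)
# "Evidence" is a bare URL or a Markdown-style [text](url) link.
EVIDENCE = re.compile(r"https?://\S+|\[[^\]]+\]\([^)]+\)")


def unsupported_claims(chunks: list[str]) -> list[int]:
    """Return the indexes of chunks that state a statistic but link to nothing."""
    return [
        i
        for i, chunk in enumerate(chunks)
        if STAT.search(chunk) and not EVIDENCE.search(chunk)
    ]
```

Running it over a page that has already been split into chunks returns the positions that need a source added nearby, or the claim moved next to its source.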


Clarify for semantic precision and AI understanding

- Semantic clarity

  - Focus on answering the implied question unmistakably.

  - A well-defined concept, connected to its related subtopics, tends to outperform a keyword-dense paragraph.

  - For example, if you’re writing content on a specific topic, think about all the related subtopics people might search for and make sure you cover them.

  - Internal linking, citations, and varied content types all play a big role in making your content more effective.

- Intent alignment and query fan-out

  - Google’s AI Mode spins a seed query into dozens of related questions.

  - Your page should pre-empt logical follow-ups:

    - Definitions.

    - Comparisons.

    - Pros/cons.

    - Costs.

    - Timelines.

    - How-to questions.

- Readability matters

  - An 8th- to 11th-grade reading level consistently earns more citations in AI summaries.

  - Plain language improves both human engagement and machine parsing.

- Write for machine precision

  - Use:

    - Active voice: “Our study shows…”

    - Clear agent–action–object: “Apple Inc. (the tech company) acquired Beats.”

    - Transitional cues: “However,” “Because,” “Therefore.”

  - These micro-signals help the model map cause, effect, and emphasis.

- Prepare for personalization

  - Because AI answers adjust to user embeddings, be sure to serve multiple personas with:

    - Varied use cases.

    - Examples.

    - Jargon translations within the same hub page.
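Reading level is one of the few points in this section you can measure directly. A common estimate is the Flesch-Kincaid grade level: 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. The sketch below implements that formula with a crude vowel-group syllable counter. The counter is an assumption of this example and will miscount some words; dedicated readability libraries handle this more carefully.

```python
import re


def count_syllables(word: str) -> int:
    """Crude estimate: count vowel groups, then drop a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0  # nothing to score
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59
```

Scoring each chunk separately, rather than the whole page, shows which passages drift outside the 8th- to 11th-grade band this section recommends.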


Build your knowledge graph in the AI-first index

- Schema as reinforcement, not a shortcut

  - Add Article, HowTo, FAQPage, or Product markup.

  - It won’t fix messy prose, but it does boost confidence that machines understand the on-page structure.

- Internal linking and content clusters

  - Treat your site like a graph: related pages should link to each other, highlighting topical authority while preventing orphaned content.

- Search everywhere optimization – multimodal and channel-agnostic

  - Make sure the same core ideas and content formats are up to date across platforms (essentially, anywhere LLMs pull their information from), such as:

    - YouTube.

    - LinkedIn.

    - Reddit.

    - Industry forums.

  - Consistency is key: your content strategy should stay relevant and in tune with audience intent on every channel.

- The inverse customer journey

  - AI may introduce users to your brand first, prompting a follow-up branded Google search for validation.

  - Track branded search volume as an AI visibility KPI.

- New success metrics

  - Citations in AI Overviews or chat answers.

  - Attributed influence value (AIV) for assisted conversions.

  - Engagement depth (scroll, dwell).

  - Growth in branded search and knowledge panel coverage.

- Secure your knowledge panel

  - Claim and enrich your Google Knowledge Panel (or Bing Entity Pane).

  - LLMs can draw on that structured profile when composing answers – own it before someone else shapes it for you.
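Treating your site as a graph also makes orphaned content easy to find. This sketch assumes you already have a crawl or sitemap export shaped as a dictionary from each known URL to the internal URLs it links to (a hypothetical format). It runs a breadth-first search from the homepage and reports every known page the search never reaches.

```python
from collections import deque


def find_orphans(links: dict[str, list[str]], root: str = "/") -> set[str]:
    """Return known pages that cannot be reached from root by following internal links.

    `links` maps every known URL (for example, every sitemap entry) to the
    internal URLs that page links to.
    """
    reached = {root}
    queue = deque([root])
    while queue:  # breadth-first search over internal links
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reached:
                reached.add(target)
                queue.append(target)
    # Every page we know about, whether it appears as a source or a target.
    known = set(links) | {t for targets in links.values() for t in targets}
    return known - reached
```

A page that links out but is never linked to (an old post pointing at your hub, say) still counts as an orphan here, because nothing on the site leads a crawler or a model to it.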


Align your content strategy with site architecture

LLMs evaluate not just what you publish but where it lives in your URL tree.

Misaligned architecture can dilute authority signals and maroon valuable chunks.

With AI search, your website becomes a data hub, so it’s critical that its data can be easily retrieved and understood by AI.

Mirror topic clusters in navigation

Primary nav labels → landing-page hubs → subtopic articles. This pyramid hierarchy ensures internal links flow downhill from hubs to leaf pages, reinforcing topical authority.
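Whether links really flow downhill can be checked against the URL tree. The sketch below assumes subtopic pages live under their hub’s path (for example, `/ai-search/chunking/` under `/ai-search/`) and takes a hypothetical mapping from each known URL to the set of internal URLs it links to. It reports children the hub doesn’t link down to and children that don’t link back up.

```python
def hub_link_gaps(links: dict[str, set[str]], hub: str) -> dict[str, set[str]]:
    """Check hub-and-spoke linking for one hub.

    Assumes subtopic pages sit under the hub's URL path and that `links`
    maps each known URL to the set of internal URLs it links to.
    """
    children = {page for page in links if page != hub and page.startswith(hub)}
    return {
        # Subtopic pages the hub never links down to.
        "not_linked_from_hub": children - links.get(hub, set()),
        # Subtopic pages that never link back up to their hub.
        "no_link_back_to_hub": {page for page in children if hub not in links[page]},
    }
```

Both sets should be empty for a healthy cluster; anything left over is a page whose place in the pyramid is invisible to crawlers.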

Consistent URL semantics

Use short, descriptive slugs (e.g., /ai-content-framework/chunk-cite-clarify) that echo H1s. Avoid deep nesting that buries key pages (/blog/2025/07/03/random-slug).
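Slug rules like these are easy to lint. This minimal sketch derives a slug from an H1 and flags paths that are deeply nested or contain date-style folders. The three-segment depth limit and the date pattern are illustrative assumptions, not published thresholds.

```python
import re


def slugify(h1: str) -> str:
    """Lowercase the H1 and collapse every run of non-alphanumerics (ASCII only) into one hyphen."""
    return re.sub(r"[^a-z0-9]+", "-", h1.lower()).strip("-")


def url_issues(path: str, max_depth: int = 3) -> list[str]:
    """Flag deep nesting and date-style folders (illustrative thresholds)."""
    segments = [s for s in path.strip("/").split("/") if s]
    issues = []
    if len(segments) > max_depth:
        issues.append(f"depth {len(segments)} exceeds {max_depth}")
    if any(re.fullmatch(r"\d{2,4}", s) for s in segments):
        issues.append("date-style folder")
    return issues
```

With these defaults, `/ai-content-framework/chunk-cite-clarify` passes, while `/blog/2025/07/03/random-slug` is flagged for both depth and date folders.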

Chunk boundaries ≈ page boundaries

When a topic outgrows two or three scrolls’ worth of clean chunks, graduate it to its own page and link back with a clear relational anchor (“Read our deep dive on multimodal optimization.”). This keeps each page thematically tight for AI retrieval.

Breadcrumbs and facets for context

Well-structured breadcrumbs give models a second way to understand hierarchy. Filters (industry, persona, product tier) expose cross-page relationships that machines can crawl easily.
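Breadcrumbs can also be exposed as structured data using schema.org’s `BreadcrumbList` type. The sketch below builds that JSON-LD from a root-first list of (name, path) pairs. The trail and the `https://example.com` base URL are placeholders.

```python
import json


def breadcrumb_jsonld(base_url: str, trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from root-first (name, path) pairs."""
    base = base_url.rstrip("/")
    items = [
        {"@type": "ListItem", "position": position, "name": name, "item": base + path}
        for position, (name, path) in enumerate(trail, start=1)  # positions start at 1
    ]
    return json.dumps(
        {"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": items},
        indent=2,
    )
```

Generating the markup from the same trail that renders the visible breadcrumb keeps the two from drifting apart.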

Performance and cohesion

A lightning-fast, schema-rich hub page feeding slow, bloated child pages creates mixed signals. Optimize templates globally – Core Web Vitals, accessibility, mobile – so every part of your site meets the same technical bar.
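One way to catch mixed signals is to hold every page template to the same bar. The sketch below checks per-template metrics against Google’s published “good” Core Web Vitals thresholds (LCP ≤ 2.5 s, INP ≤ 200 ms, CLS ≤ 0.1). How you collect the numbers, for example from real-user monitoring, is up to you; the template names and metric keys are hypothetical.

```python
# Google's published "good" thresholds for Core Web Vitals (75th percentile of visits).
GOOD_THRESHOLDS = {"lcp_s": 2.5, "inp_ms": 200, "cls": 0.1}


def templates_below_bar(metrics: dict[str, dict[str, float]]) -> dict[str, list[str]]:
    """Map each template to the Core Web Vitals it misses; passing templates are omitted.

    `metrics` maps a template name to its measured values, keyed like GOOD_THRESHOLDS.
    """
    failing: dict[str, list[str]] = {}
    for template, values in metrics.items():
        misses = [name for name, limit in GOOD_THRESHOLDS.items() if values[name] > limit]
        if misses:
            failing[template] = misses
    return failing
```

An empty result means hub and child templates clear the same bar; any entry points at the template to fix first.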

Preparing content for LLMs: A 5-step readiness checklist

How should brands prepare content for LLMs? Here’s a five-point checklist to ensure your content is ready.

1. Audit the content

- Check visibility, rankings, and how (or if) the page is cited in Google AI Overviews, ChatGPT, Perplexity, etc.

- Track content decay to establish a baseline for measuring impact.

- Look for topical gaps, unanswered user questions, thin spots, and outdated facts, plus quality issues such as grammatical errors and plagiarism.

2. Revise content to have one clear intent per page

- One H1 that reinforces the main topic of the page in plain language.

- Clear metadata that aligns with the main topic.

3. Chunk and label the content

- Break long blocks into scannable sections (H2/H3), bullet lists, tables, and a short TL;DR/FAQs.

- Keep each “chunk” self-contained so LLMs can quote it without losing context. One idea per chunk.

4. Enrich with real expertise

- Fill in topical gaps by expanding on related topics.

- Add fresh data, firsthand examples, or expert quotes – things AI can’t easily synthesize on its own.

- Cite and link to primary sources right in the text to boost credibility.

5. Layer on machine-readable signals

- Insert or update schema markup (FAQPage, HowTo, Product, Article, etc.).

- Use clear alt descriptions and file names for images.
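For step 5, FAQPage markup is a common starting point. This sketch builds schema.org `FAQPage` JSON-LD from (question, answer) pairs using only the standard library. The output belongs inside a `<script type="application/ld+json">` tag, and the questions and answers should match text that is visible on the page.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "FAQPage",
            "mainEntity": [
                {
                    "@type": "Question",
                    "name": question,
                    "acceptedAnswer": {"@type": "Answer", "text": answer},
                }
                for question, answer in pairs
            ],
        },
        indent=2,
    )
```

Building the markup from the same source as the on-page FAQ block keeps the structured data and the visible chunk saying the same thing.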

Publish, monitor, and measure impact

Track how your content performs across AI-native search.

Run an LLM visibility audit, monitor traffic from different platforms, and compare branded vs. non-branded prompts.

Review LLM citations to gauge your presence and influence in AI-generated results.
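Comparing branded and non-branded prompts comes down to simple ratios once audit results are recorded. This sketch assumes you have already collected, manually or with a tool of your choice, which domains each AI answer cited. The `PromptResult` record and `citation_share` function are hypothetical, and the code does not call any LLM or search API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptResult:
    prompt: str
    branded: bool  # True if the prompt names your brand
    cited_domains: list[str] = field(default_factory=list)  # domains the AI answer cited


def citation_share(results: list[PromptResult], domain: str) -> dict[str, float]:
    """Share of branded and non-branded prompts whose answers cite `domain`."""
    share: dict[str, float] = {}
    for label, is_branded in (("branded", True), ("non_branded", False)):
        group = [r for r in results if r.branded == is_branded]
        cited = sum(domain in r.cited_domains for r in group)
        share[label] = cited / len(group) if group else 0.0
    return share
```

Re-running the same prompt set on a schedule turns these two ratios into a trend line you can track alongside branded search volume.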

Relevance engineering for the future of search

SEO is mutating into relevance engineering – the craft of making information intelligible, trustworthy, and context-rich for both people and machines wherever search happens.

In an AI-first index, success belongs to brands that:

- Chunk information into digestible, self-contained insights.

- Cite sources and authorship to radiate credibility.

- Clarify meaning so unmistakably that hallucination becomes unlikely.

- Build a knowledge graph by using schema to connect the entities in their content, helping AI engines understand their business better.

Your website serves as a data hub, so your content needs to align with your audience.

Brands should also adopt a channel-agnostic strategy to reach users wherever they are.

Apply these principles consistently, and your content becomes a trusted source AI systems cite, recommend, and route users through – from discovery to conversion.

Thanks to David Banahan and Tushar Prabhu for helping shape this framework.